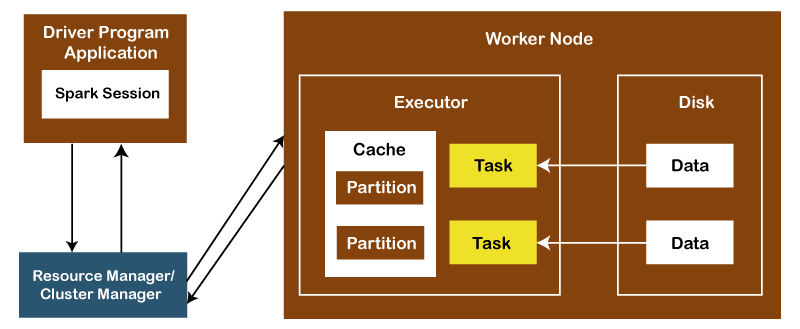

Apache Spark applications run as independent sets of processes on a cluster, coordinated by the `SparkSession` object in the driver program.

First, the cluster manager (also called the resource manager) allocates executors on the worker nodes, and the driver schedules tasks on those executors, one task per partition. Each task applies its unit of work to the data in its partition and outputs a new partition. Iterative algorithms, which apply operations to the same data repeatedly, benefit from caching datasets in memory across iterations instead of recomputing them each time. Finally, the results are either sent back to the driver program or saved to disk.
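The toy model below sketches this flow in plain Python. It is not Spark's API and does not use PySpark. It rests on simplifying assumptions: threads stand in for executors on worker nodes, a Python list stands in for a partition, and the helper names (`make_partitions`, `run_tasks`, `parse`, `partial_gradient`, `fit_mean`) are hypothetical. The "iterative algorithm" here is gradient descent toward the mean of the data. The parsed partitions are computed once and cached, and each iteration runs one task per cached partition. The driver then combines the partial results.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import partial
from typing import Callable, List, Sequence


def make_partitions(data: Sequence, num_partitions: int) -> List[list]:
    """Split the dataset round-robin into num_partitions partitions (hypothetical helper)."""
    return [list(data[i::num_partitions]) for i in range(num_partitions)]


def run_tasks(pool: ThreadPoolExecutor, partitions: List[list], task: Callable) -> list:
    """Launch one task per partition; each task sees only its own partition."""
    return list(pool.map(task, partitions))


def parse(part: List[str]) -> List[float]:
    """Per-partition transformation whose output is cached and reused."""
    return [float(x) for x in part]


def partial_gradient(c: float, part: List[float]) -> float:
    """Gradient of sum((x - c)**2) with respect to c, over one partition only."""
    return sum(2.0 * (c - x) for x in part)


def fit_mean(raw: Sequence[str], num_partitions: int = 4,
             iterations: int = 100, lr: float = 0.1) -> float:
    """Driver: estimate the mean of `raw` by gradient descent over partitions."""
    parts = make_partitions(raw, num_partitions)
    # Assumption of this sketch: threads play the role of executors on worker nodes.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        cached = run_tasks(pool, parts, parse)  # computed once, reused every iteration
        n = sum(run_tasks(pool, cached, len))
        if n == 0:
            raise ValueError("dataset is empty")
        c = 0.0
        for _ in range(iterations):
            # Each iteration reruns tasks over the cached partitions, not the raw input.
            grads = run_tasks(pool, cached, partial(partial_gradient, c))
            c -= lr * sum(grads) / n  # partial results are combined on the driver
    return c


print(round(fit_mean([str(i) for i in range(1, 11)]), 6))  # 5.5
```

In PySpark, rough counterparts would be an RDD or DataFrame split into partitions, `cache()` to keep the parsed data in memory between iterations, and actions such as `reduce()` or `collect()` to return results to the driver.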